Creating 3D objects for game scenes, virtual worlds including the metaverse, product design, or visual effects has traditionally been a meticulous process in which skilled artists balance detail and photorealism against deadlines and budget pressures.

It takes a long time to create something that looks and acts like it would in the physical world. And the problem becomes more difficult when several objects and characters must interact in a virtual world. Simulating physics becomes just as important as simulating light. A robot in a virtual factory, for example, must not only look the same, but also have the same weight capacity and braking ability as its physical counterpart.

It’s hard. But the opportunities are enormous, affecting trillion-dollar industries as varied as transportation, healthcare, telecommunications and entertainment, in addition to product design. Ultimately, more content will be created in the virtual world than in the physical world.

To simplify and shorten this process, NVIDIA today released new research and a broad suite of tools that apply the power of neural graphics to the creation and animation of 3D objects and worlds.

These SDKs, including NeuralVDB, a revolutionary update to the industry-standard OpenVDB, and Kaolin Wisp, a PyTorch library establishing a framework for neural field research, ease the creative process for designers while making it easy for millions of users who are not design professionals to create 3D content.

Neural graphics is a new field blending AI and graphics to create an accelerated graphics pipeline that learns from data. Integrating AI improves results, helps automate design choices, and opens up new, previously unimagined opportunities for artists and creators. Neural graphics will redefine how virtual worlds are created, simulated and experienced by users.

These SDKs and research contribute to every stage of the content creation pipeline, including:

3D Content Creation

- Kaolin Wisp – an addition to Kaolin, a PyTorch library enabling faster 3D deep learning research by reducing the time needed to test and implement new techniques from weeks to days. Kaolin Wisp is a research-focused library for neural fields, establishing a common suite of tools and a framework to accelerate new research in neural fields.

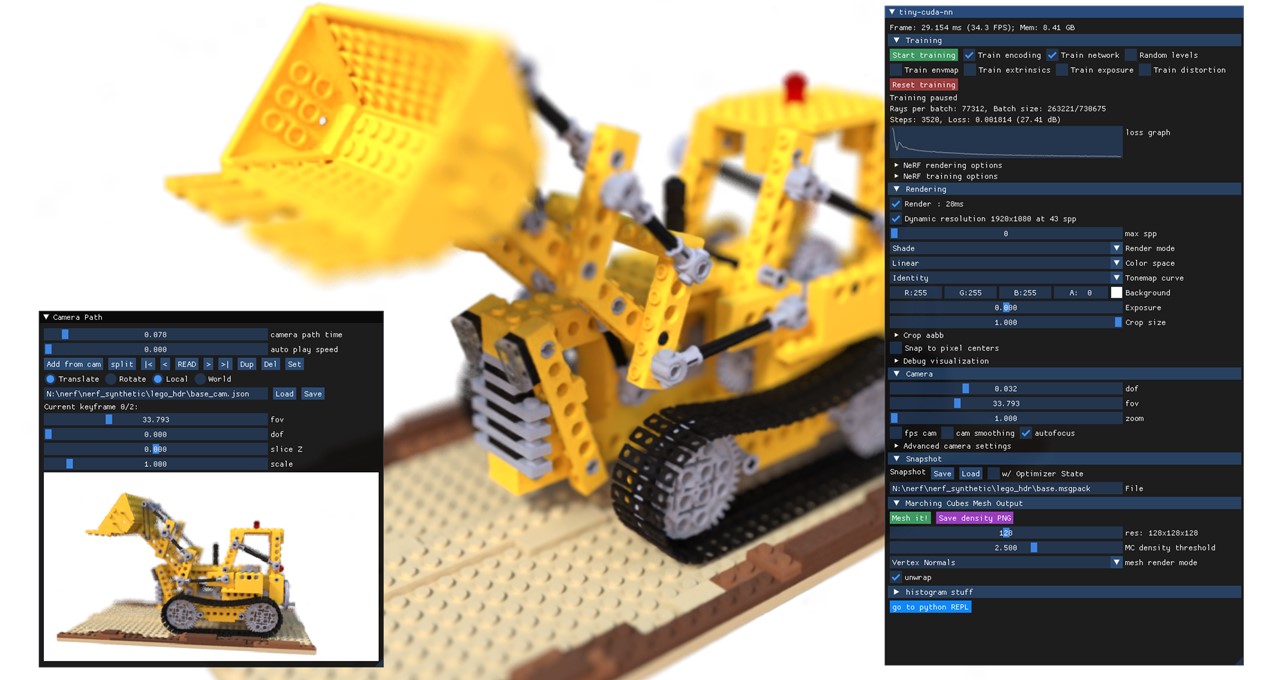

- Instant Neural Graphics Primitives – a new approach to capturing the shape of real-world objects, and the inspiration behind NVIDIA Instant NeRF, an inverse rendering model that transforms a collection of still images into a digital 3D scene. This technique and the associated GitHub code speed up the process by up to 1,000x.

- 3D MoMa – a new inverse rendering pipeline that allows users to quickly import a 2D object into a graphics engine to create a 3D object that can be modified with realistic materials, lighting, and physics.

- GauGAN360 – the next evolution of NVIDIA GauGAN, an AI model that transforms rough sketches into photorealistic masterpieces. GauGAN360 generates 8K, 360-degree panoramas that can be ported into Omniverse scenes.

- Omniverse Avatar Cloud Engine (ACE) – a new collection of cloud APIs, microservices and tools to build, customize and deploy digital human applications. ACE is based on NVIDIA’s Unified Computing Framework, allowing developers to seamlessly integrate NVIDIA’s core AI technologies into their avatar applications.

Physics and Animation

- NeuralVDB – a revolutionary enhancement to OpenVDB, the current industry standard for volumetric data storage. Using machine learning, NeuralVDB introduces compact neural representations, dramatically reducing the memory footprint to enable higher resolution 3D data.

- Omniverse Audio2Face – an artificial intelligence technology that generates an expressive facial animation from a single audio source. It is useful for real-time interactive applications and as a traditional facial animation creation tool.

- ASE: Animation Skills Embedding – an approach allowing physically simulated characters to act more responsively and realistically in unfamiliar situations. It uses deep learning to teach characters how to respond to new tasks and actions.

- TAO Toolkit – a framework that allows users to create an accurate, high-performance pose estimation model, which can evaluate what a person might be doing in a scene using computer vision much more quickly than current methods.

Experience

- Image Features Eye Tracking – a research model linking the rendering quality of the pixels to the reaction time of the user. By predicting the best combination of render quality, display properties, and display conditions for the least latency, it will enable better performance in fast-paced interactive computer graphics applications such as competitive games.

- Holographic glasses for virtual reality – a collaboration with Stanford University on a new VR goggle design that delivers full-color 3D holographic images in a revolutionary 2.5mm-thick optical stack.

Join NVIDIA at SIGGRAPH to learn about the latest breakthroughs in research and technology in the fields of graphics, AI and virtual worlds. Discover the latest innovations from NVIDIA Research, and access the full suite of NVIDIA SDKs, tools and libraries.